Are you tired of laggy graphics while playing games or rendering videos on your computer? One solution is to set your dedicated graphics card as the default instead of relying on the integrated graphics built into your processor. This may sound complicated, but this guide walks you through it. By the end of this article, you’ll have a better understanding of what graphics cards are, why they matter, and how to set yours up as the primary option for maximum performance.

So, let’s get started!

Why You May Need to Set Your Graphics Card as Default

Are you experiencing poor graphics quality or slow processing speeds on your computer while gaming or running high-performance applications? It may be time to consider setting your graphics card as the default. Doing so ensures that your computer’s dedicated graphics card is being used, rather than the integrated graphics built into your processor. This can result in improved graphics performance, smoother gameplay, and faster application processing times.

If your graphics card is not set as the default, your computer may not be using its full potential. Don’t let your hardware go to waste. Take the time to adjust your settings and optimize your computer for the best performance possible.

Poor Graphics Performance

If you’re experiencing poor graphics performance on your computer, it could be because your dedicated graphics card is not set as the default. Setting it as the default can significantly improve how smoothly your games, videos, and other graphics-intensive applications run. Integrated graphics share system memory and the processor’s power budget, so demanding workloads can result in choppy gameplay, slow video playback, and overall poor performance.

Making the dedicated graphics card the default moves that rendering work to hardware built for it, allowing for smoother and more efficient processing. So if you want an enhanced gaming and viewing experience, setting your graphics card as the default is the way to go.

Games Not Running Smoothly

If you’re a gamer, you probably understand the frustration of games not running smoothly. In these moments, you may need to set your graphics card as default. When you start up a game, your computer may use the integrated graphics built into your CPU, particularly on laptops that switch between two GPUs to save power.

However, integrated graphics may not be powerful enough to smoothly run newer games with high graphics demands. By setting your graphics card as default, you ensure that your computer uses the full power of your graphics card, resulting in better performance when playing games. The exact process varies depending on your operating system and graphics card vendor; the sections below cover the most common approach on Windows.

So, if you’re tired of choppy gameplay and want to get the best gaming experience possible, consider setting your graphics card as default.

Checking Your Graphics Card

Are you experiencing sluggish performance when running your favorite games or programs? It’s possible that your dedicated graphics card is not being used. To see which graphics adapters your system has, open Device Manager and expand the Display adapters category. If both an integrated and a dedicated adapter are listed, your computer can switch between them. Note that Device Manager shows which adapters are installed, not which one a given program is using. If your programs are running on the integrated graphics, you can set your dedicated graphics card as the default by opening your graphics card control panel and specifying it as the primary graphics card.

This will improve your system’s performance and allow you to enjoy your favorite games and programs without any lags or slowdowns. Don’t let a simple configuration issue hold you back from reaching your full potential – set your graphics card as the default and unlock the power of your system today!

Opening Device Manager

If you’re experiencing issues with your PC’s graphics performance, checking your graphics card is a crucial first step. Thankfully, it’s a relatively simple process. One way to do this is to open up Device Manager on your Windows computer.

To do this, simply press the Windows key and the X key at the same time. This will bring up a menu of options, including Device Manager. Clicking on Device Manager will open up a list of all the hardware on your PC, including your graphics card.

Look for the “Display Adapters” option and expand it to see your graphics card listed. From here, you can check the name of your graphics card and its driver version. This information can be useful when troubleshooting graphics-related issues or determining whether your graphics card is up to date.

By regularly checking your graphics card this way, you’ll be able to stay on top of any potential issues and ensure your computer is performing at its best.
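The same adapter list can also be read from a script. The following is a minimal sketch that assumes a Windows machine with PowerShell available; it queries the `Win32_VideoController` class and then guesses from each name whether the adapter is integrated or dedicated. The keyword lists are illustrative assumptions, not an official or complete catalog, so treat the classification as a hint only.

```python
import subprocess

# Name fragments that often indicate each kind of adapter.
# These lists are illustrative assumptions, not exhaustive.
INTEGRATED_HINTS = ("intel(r) hd", "intel(r) uhd", "intel(r) iris", "radeon(tm) graphics")
DEDICATED_HINTS = ("geforce", "quadro", "radeon rx")


def classify_adapter(name):
    """Guess whether an adapter name looks integrated or dedicated."""
    lowered = name.lower()
    if any(hint in lowered for hint in INTEGRATED_HINTS):
        return "integrated"
    if any(hint in lowered for hint in DEDICATED_HINTS):
        return "dedicated"
    return "unknown"


def parse_adapter_names(raw_output):
    """Split command output into non-empty, stripped adapter names."""
    return [line.strip() for line in raw_output.splitlines() if line.strip()]


def list_adapters():
    """Ask Windows for the installed display adapters (Windows only)."""
    command = [
        "powershell", "-NoProfile", "-Command",
        "Get-CimInstance Win32_VideoController | Select-Object -ExpandProperty Name",
    ]
    result = subprocess.run(command, capture_output=True, text=True, check=True)
    return parse_adapter_names(result.stdout)
```

On a Windows PC, calling `list_adapters()` and passing each name to `classify_adapter()` gives roughly the same information as the Display Adapters entry in Device Manager.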

Finding Your Graphics Card

If you’re a computer user, you might wonder what graphics card you have in your machine. Checking your graphics card is essential, especially if you want to play modern games or perform other graphics-intensive tasks. Finding your graphics card is a simple process, and there are several methods to do it.

The easiest and fastest way to check your graphics card is through your operating system. On Windows, type “dxdiag” in the search bar to open the DirectX Diagnostic Tool, then switch to the Display tab. Here, you can see the graphics card information, including the manufacturer, model, and driver version.

On a Mac, click the Apple logo in the top left corner, click “About This Mac,” and then open “System Report” to see your graphics card information (the exact path differs slightly between macOS versions). Knowing which graphics card is in your computer helps you check whether your system meets a program’s requirements and whether it can handle specific tasks.
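Both tools can also produce a text report that a script can read. The sketch below assumes that `dxdiag /t` writes its report to the given file on Windows and that `system_profiler SPDisplaysDataType` prints a “Chipset Model” line on macOS; the output file name is a hypothetical choice, and the field labels may differ between OS versions.

```python
import re
import subprocess
import sys


def extract_fields(report_text, label):
    """Return every value that follows 'label:' at the start of a line."""
    pattern = re.compile(
        r"^[ \t]*" + re.escape(label) + r":[ \t]*(.+?)[ \t]*$", re.MULTILINE
    )
    return pattern.findall(report_text)


def graphics_card_names():
    """Collect adapter names from the operating system's own report."""
    if sys.platform == "darwin":
        result = subprocess.run(
            ["system_profiler", "SPDisplaysDataType"],
            capture_output=True, text=True, check=True,
        )
        return extract_fields(result.stdout, "Chipset Model")
    if sys.platform == "win32":
        report_path = "dxdiag_report.txt"  # hypothetical output file name
        subprocess.run(["dxdiag", "/t", report_path], check=True)
        with open(report_path, encoding="utf-8", errors="replace") as report:
            return extract_fields(report.read(), "Card name")
    raise OSError("This sketch only covers Windows and macOS.")
```

The parsing is kept separate from the command calls so that `extract_fields` can be tried on a saved report from any machine.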

Setting Your Graphics Card as Default

If you’re a gamer or a digital creator, having a dedicated graphics card can make a huge difference in the quality of your work or play. However, sometimes your computer may default to using the integrated graphics card instead of the more powerful dedicated one. If this is the case, you’ll want to set your graphics card as default.

The process varies depending on your computer and graphics card, but typically involves accessing your computer’s BIOS settings or your graphics card’s control panel. By setting your graphics card as default, you’ll enjoy better performance and smoother playback while gaming or designing. So, if you’re ready to take your digital experience to the next level, try setting your graphics card as default and see the difference it can make.

Opening Graphics Settings

One of the most critical aspects of optimizing your gaming experience is ensuring that your graphics card is set as the default for your computer. This setting can help boost your computer’s performance, making your games run smoother and faster. To check or change it, you’ll need to access your graphics settings, typically found in the Windows Settings app under the Display section or in your graphics card vendor’s control panel.

From there, you can select your graphics card as the default and adjust your settings to your liking. By taking this step, you’ll be able to enjoy your games at their highest quality and get the most out of your computer’s capabilities. Remember, optimizing your graphics settings can make all the difference in your gaming experience, so don’t overlook this crucial step.

Selecting Default Graphics Card

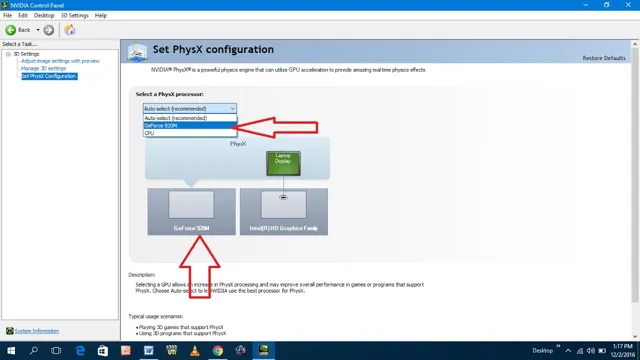

If you’re using a computer with multiple graphics cards, it’s important to ensure that your main graphics card is set as the default. This will ensure that all applications and games use the most powerful graphics card, providing the best possible performance. To set your default graphics card, start by opening the graphics control panel for your graphics card.

From there, locate the option to set your main graphics card as the default, and select it. You may also be able to set specific applications to use specific graphics cards if you prefer. Overall, taking a few minutes to set your default graphics card can make a big difference in the quality of your computer’s performance.

Testing Your Default Graphics Card

If you’re experiencing performance issues with your graphics-intensive applications, check that your dedicated graphics card is actually set as the default. To do so, right-click on your desktop and select “Graphics Properties,” “NVIDIA Control Panel,” or the AMD equivalent, depending on which vendor’s driver is installed.

From there, you can check which graphics card is set as your default and make changes if necessary. It’s generally best to select the most capable card, usually the dedicated GPU, as your default. Graphics-intensive applications should then perform noticeably better.

Don’t let your default graphics card hold you back – take control and optimize your computer for optimum performance today.
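One way to confirm that a dedicated card is really doing the work is to watch its load while a game is running. The sketch below applies only to NVIDIA cards and assumes the `nvidia-smi` tool that ships with the NVIDIA driver is on your PATH; AMD and Intel users would need a different tool. A reading well above zero during gameplay suggests the dedicated GPU is in use.

```python
import subprocess


def parse_utilization(csv_text):
    """Turn 'name, percent' lines into (name, percent) tuples."""
    readings = []
    for line in csv_text.splitlines():
        if not line.strip():
            continue
        name, percent = line.rsplit(",", 1)
        try:
            value = int(percent.strip())
        except ValueError:
            value = None  # the tool reported something other than a number
        readings.append((name.strip(), value))
    return readings


def read_gpu_utilization():
    """Query the current load of each NVIDIA GPU (requires nvidia-smi)."""
    command = [
        "nvidia-smi",
        "--query-gpu=name,utilization.gpu",
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(command, capture_output=True, text=True, check=True)
    return parse_utilization(result.stdout)
```

Run `read_gpu_utilization()` once at the desktop and once with the game open, then compare the two readings.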

Running Benchmark Tests

If you’re a gamer or graphic designer, then you know how crucial it is to have a high-performing graphics card. However, if you’re not sure how well your default graphics card is performing, running benchmark tests can help you determine its speed and overall capabilities. Testing your default graphics card will give you an idea of its strengths and weaknesses, helping you identify whether it’s time for an upgrade.

Most benchmark tests are easy to run and can be done for free, making it a quick and affordable way to evaluate your computer’s graphics card. By performing these tests, you’ll be able to determine whether your graphics card is meeting the demands of your games or design software, and whether it’s time for an upgrade.
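Many benchmark tools can export a log of frame times, and a little arithmetic turns that log into numbers you can compare before and after changing the default graphics card. The sketch below assumes frame times in milliseconds; the function names and the sample values are made up for illustration and are not real measurements.

```python
def average_fps(frame_times_ms):
    """Average frames per second for a list of frame times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("At least one frame time is required.")
    total_seconds = sum(frame_times_ms) / 1000.0
    return len(frame_times_ms) / total_seconds


def percent_change(before, after):
    """Relative change from 'before' to 'after', as a percentage."""
    return (after - before) / before * 100.0


# Hypothetical frame times, not real benchmark results.
integrated_run = [40.0, 40.0, 40.0, 40.0]   # 40 ms per frame
dedicated_run = [20.0, 20.0, 20.0, 20.0]    # 20 ms per frame

fps_before = average_fps(integrated_run)
fps_after = average_fps(dedicated_run)
print(f"Before: {fps_before:.1f} FPS, after: {fps_after:.1f} FPS")
print(f"Change: {percent_change(fps_before, fps_after):+.1f}%")
```

Averages hide stutter, so it is also worth looking at the slowest frames in the log and not only the mean.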

Playing Games

If you’re an avid gamer, you know that the performance of your graphics card can make or break your gaming experience. However, have you ever stopped to consider if you’re using the default graphics card that came with your computer? It’s possible that your computer is using the integrated graphics from your processor, which may not be sufficient for high-end games. That’s why it’s important to test your default graphics card to ensure it’s up to par.

You can do this by running benchmark tests or testing it out with some of your favorite games. By doing so, you’ll be able to determine if you need to upgrade your graphics card to fully enjoy your gaming experience. Remember, the right graphics card can enhance the visuals and performance of your games, making them more enjoyable overall.

Final Thoughts

Setting your graphics card as default can be a bit tricky, but it’s definitely worth it if you want to get the best performance out of your PC. Many computers that have both kinds of graphics, laptops in particular, default to the integrated graphics (built into the processor) to save power. If you have a dedicated graphics card installed, you’ll want to set it as the default option for demanding tasks.

On desktops, this can often be done by going into your computer’s BIOS or UEFI settings and changing the primary display option (its exact name varies by motherboard) to the slot that holds your dedicated graphics card. On laptops, the Windows graphics settings or the graphics card’s control panel is usually the place to make the change. Once you’ve done this, you should notice an improvement in the performance of graphics-intensive applications and games. So if you’re looking to get the most out of your PC, it’s definitely worth taking the time to set your graphics card as default.

Conclusion

In conclusion, setting your graphics card as default is like designating a superhero as the leader of your Avengers squad. You need someone powerful, efficient, and reliable to make the most out of your computing experience. So don’t settle for the weaker integrated graphics. Give your machine the boost it deserves by giving your graphics card the helm.

With great GPU power comes great computing responsibility, but trust us, it’s worth it.

FAQs

How to set graphics card as default on Windows 10?

To set the graphics card as default on Windows 10, follow these steps:

1. Right-click on the desktop and select “Display settings.”

2. Scroll down and click on “Graphics settings.”

3. Under “Choose an app to set preference,” select “Classic app” (labeled “Desktop app” in newer Windows 10 versions).

4. Click on “Browse” and select the app/game you want to use with the dedicated graphics card.

5. Click on “Options.”

6. Select “High performance” and click on “Save.”
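The steps above can also be sketched in a script. This is a hedged example, not an official API: it assumes, based on commonly observed behavior, that Windows stores the per-app choice as a string value under `HKEY_CURRENT_USER\Software\Microsoft\DirectX\UserGpuPreferences`, named after the program’s full path, with `GpuPreference=2;` meaning high performance. That location and format could change between Windows versions, and the game path in the usage note is hypothetical.

```python
import sys

# Assumed registry location for per-app GPU preferences (see note above).
GPU_PREFERENCE_KEY = r"Software\Microsoft\DirectX\UserGpuPreferences"

# Assumed meaning of each preference number.
PREFERENCES = {"default": 0, "power_saving": 1, "high_performance": 2}


def build_preference_value(preference):
    """Build the string Windows is assumed to store for a preference."""
    return f"GpuPreference={PREFERENCES[preference]};"


def set_gpu_preference(exe_path, preference="high_performance"):
    """Store a per-app GPU preference for the current user (Windows only)."""
    if sys.platform != "win32":
        raise OSError("This sketch only applies to Windows.")
    import winreg  # imported here because the module exists only on Windows

    with winreg.CreateKey(winreg.HKEY_CURRENT_USER, GPU_PREFERENCE_KEY) as key:
        winreg.SetValueEx(
            key, exe_path, 0, winreg.REG_SZ, build_preference_value(preference)
        )
```

For example, `set_gpu_preference(r"C:\Games\MyGame\game.exe")` would request the high-performance GPU for that hypothetical game. The Settings app remains the safer route, and the program usually needs a restart before the change takes effect.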

How do I know which graphics card is my default?

To know which graphics card is your default, follow these steps:

1. Right-click on the desktop and select “Display settings.”

2. Scroll down and click on “Graphics settings.”

3. Under “Graphics performance preference,” select an app you have added and click “Options” to see which graphics card Windows lists as the power-saving GPU and which as the high-performance GPU.

4. If you have both an integrated and a dedicated graphics card, you can change which one an app uses by following the steps in the first question above.

Will setting the graphics card as default improve my gaming performance?

Yes, setting the graphics card as default can improve your gaming performance if you have a dedicated graphics card. Integrated graphics share system memory and the processor’s power budget, which can limit gaming performance. With the dedicated graphics card set as default, your games will usually run smoother and at higher graphics settings.

Why is my dedicated graphics card not being used as default?

There can be various reasons why your dedicated graphics card is not being used as default, such as outdated drivers, incorrect BIOS settings, or an outdated operating system. To solve this issue, you can update your drivers, check your BIOS settings, or contact the manufacturer for further assistance.